(Note: this page needs some update :), see publications for published research projects)

Eliciting Dance Learning Schemes through Technology Probe

We are interested in supporting motor skill acquisition in highly creative skilled practices such as dance. We conducted semi-structured interviews with 11 professional dancers to better understand how they learn new dance movements. We found that each dancer engages in a set of operations, including imitation, segmentation, marking, or applying movement variations. We also found a progression in learning dance movements composed of three steps consistently reported by dancers: analysis, integration and personalization. In this study, we aim to provide an empirical foundation to better understand the acquisition of dance movements from the perspective of the learner.

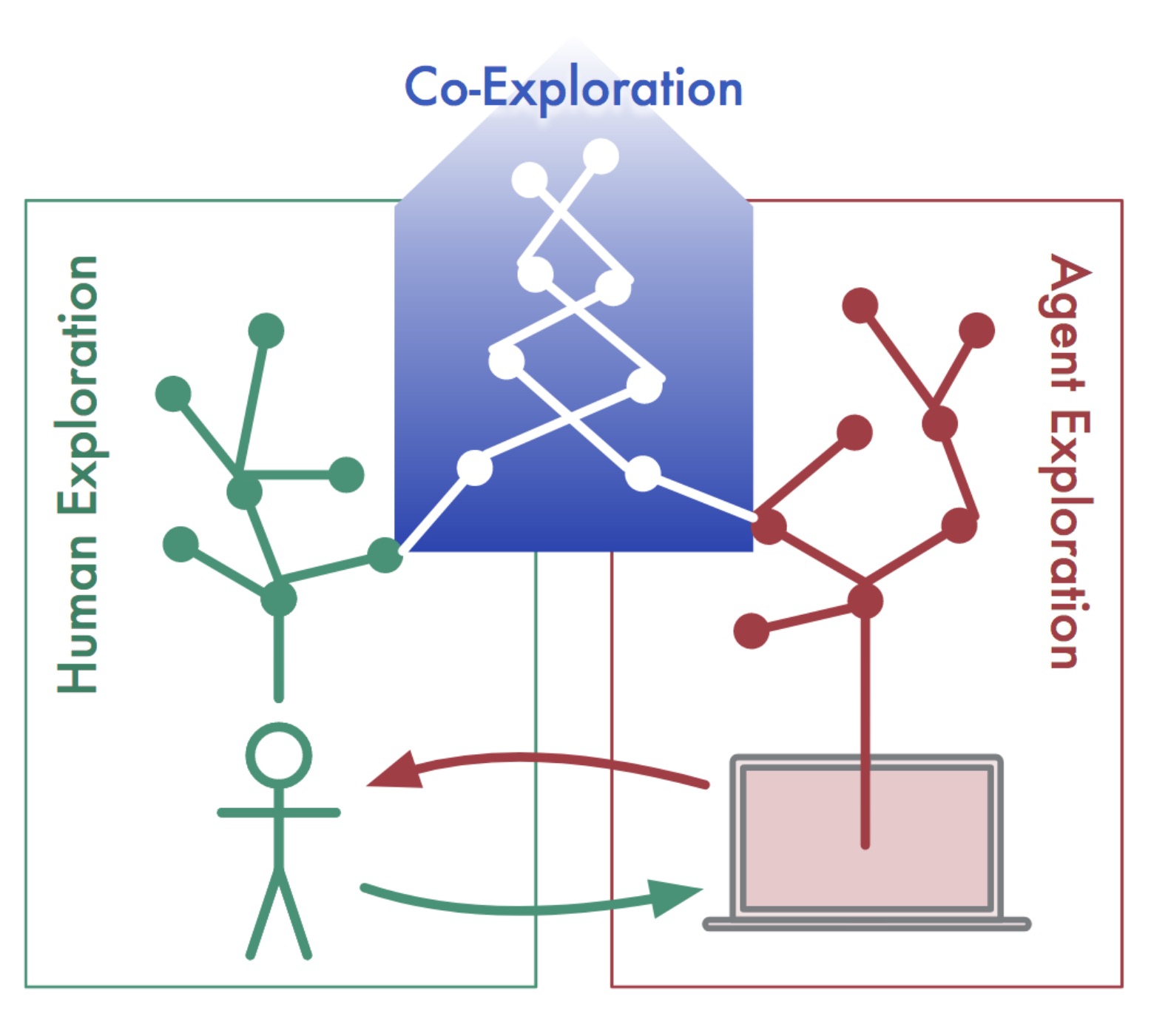

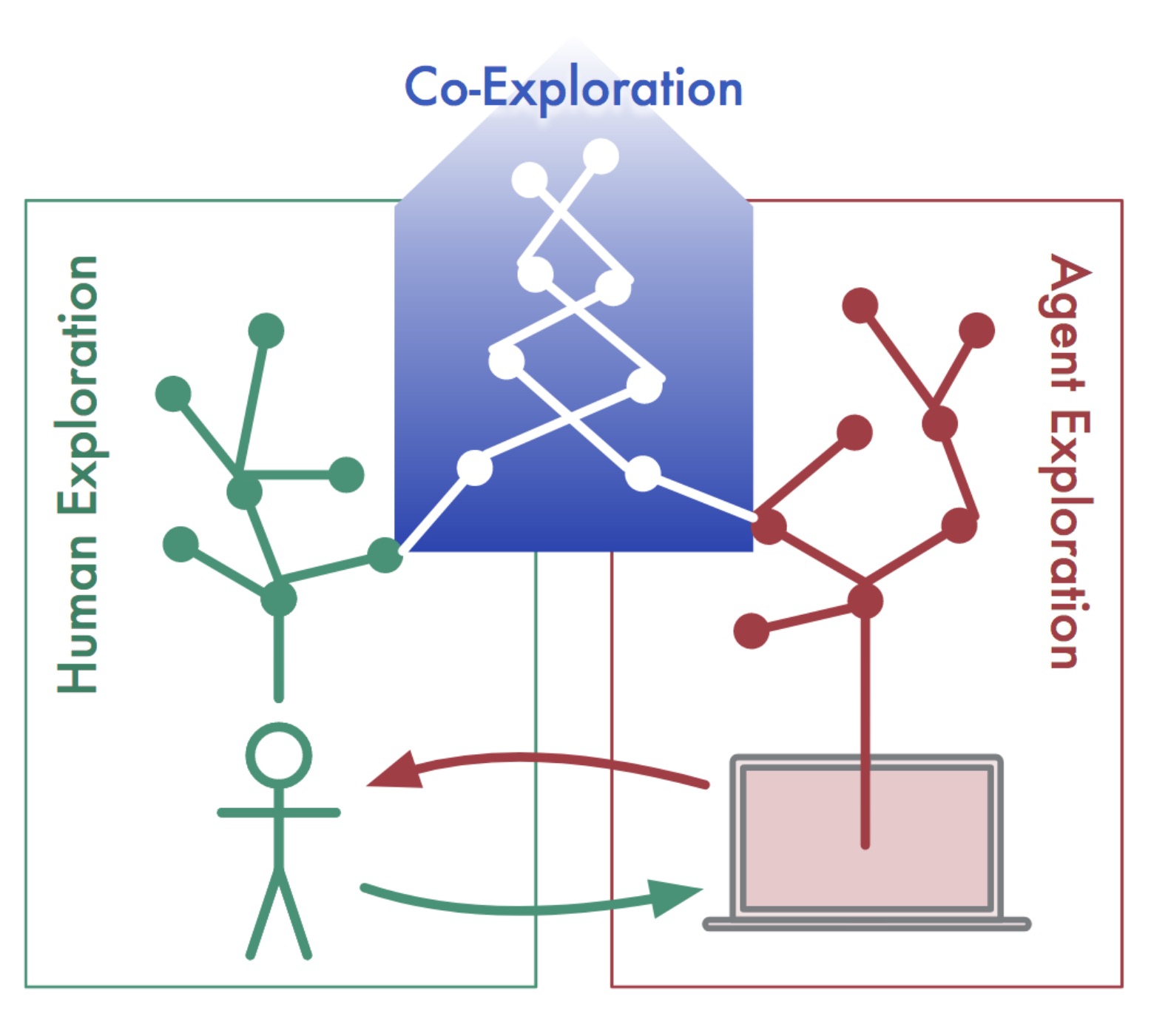

Sound Design with Interactive Reinforcement Learning

The goal is to allow humans to explore musical environments by communicating feedback to an artificial agent. The implemented system is based on an interactive reinforcement learning workflow, which enables agents to incrementally learn how to act on an environment by balancing exploitation of knowledge gained from human feedback and exploration of new musical content. In a controlled experiment, participants successfully interacted with these agents to reach a sonic goal in two cases of different complexity. Subjective evaluations suggest that the exploration path taken by agents, rather than the fact of reaching a goal, may be critical to how agents are perceived as collaborative. We discuss these quantitative and qualitative results and identify future research directions toward deploying our “co-exploration” approach in real-world contexts.
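As a rough illustration of the exploitation/exploration balance described above, here is a minimal sketch. It is not the system used in the study: the tabular value estimates, the epsilon-greedy rule, the one-dimensional "sound parameter" environment, and the names `FeedbackAgent`, `simulated_human` and `run_session` are all assumptions made for the example, and a simulated listener stands in for real human feedback.

```python
import random


class FeedbackAgent:
    """Tabular epsilon-greedy agent that learns from feedback values (illustrative only)."""

    def __init__(self, n_states, n_actions, epsilon=0.2, lr=0.5, seed=0):
        self.values = [[0.0] * n_actions for _ in range(n_states)]
        self.epsilon = epsilon  # probability of exploring a random action
        self.lr = lr            # how fast estimates move toward new feedback
        self.rng = random.Random(seed)

    def act(self, state):
        if self.rng.random() < self.epsilon:
            # Exploration: try a random action, possibly new sonic content.
            return self.rng.randrange(len(self.values[state]))
        # Exploitation: pick the action with the best feedback so far.
        row = self.values[state]
        return row.index(max(row))

    def learn(self, state, action, feedback):
        old = self.values[state][action]
        self.values[state][action] = old + self.lr * (feedback - old)


def simulated_human(state, action, goal):
    """Hypothetical listener: +1 if the action moves toward the goal, else -1."""
    step = 1 if action == 1 else -1
    return 1.0 if abs(state + step - goal) < abs(state - goal) else -1.0


def run_session(n_states=10, goal=7, steps=200, seed=0):
    """Let the agent move along a 1-D sound parameter while receiving feedback."""
    agent = FeedbackAgent(n_states, 2, seed=seed)
    state = 0
    for _ in range(steps):
        action = agent.act(state)  # 0 = decrease the parameter, 1 = increase it
        agent.learn(state, action, simulated_human(state, action, goal))
        step = 1 if action == 1 else -1
        state = min(n_states - 1, max(0, state + step))
    return agent, state
```

Raising `epsilon` makes the agent wander through more of the sound space, while lowering it makes the agent follow the feedback it has already received more closely.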

Role of tempo Variability on skill learning

This project aims to better understand the role of variability in complex skill acquisition. Moving efficiently and at the right time is important in complex human skills such as music performance. The acquisition of such motor skills remains largely unexplored, especially the role of learning schedules in acquisition of timed motor skills. Varied learning schedules are known to facilitate transfer to other motor tasks, and retention of a learned task in spatial movements. Facilitation of transfer of learning through variability in learning schedules has been reported as evidence of structure learning in visuomotor tasks. In this project, we examined the effects of a variable tempo learning schedule on timing skill acquisition with non-musician participants who learned an 8-note sequence on a piano keyboard.

PLoS ONE article [pdf]

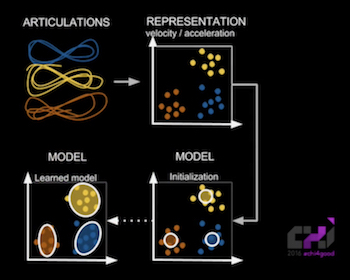

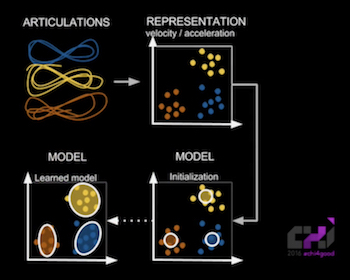

LEARNING PERSONAL GESTURE VARIATION IN MUSIC Conducting

This work presents a system that learns expressive and idiosyncratic gesture variations from gestural demonstrations provided by a user. From these variation examples, the system builds a regression model. The user can then explore the variation space by performing the same variations or combinations of them. To illustrate the technique, the system is used as an interaction technique in a music conducting scenario where gesture variations drive music articulation. The machine learning model is based on Gaussian Mixture Modeling (GMM). In a user study, we evaluated the system's performance as well as the influence of the users' musical expertise. Results show that 1) the model is able to learn idiosyncratic variations provided by each user, 2) users are able to control musical articulations through gestural variations, 3) users with musical expertise achieve better performance, and 4) all users improve through practice.
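One common way to obtain a regression model from a Gaussian mixture is Gaussian mixture regression, which predicts an output as the conditional mean of a joint mixture over inputs and outputs. The sketch below shows that idea for one input and one output. It is an assumption about how such a model can work, not the implementation from the article: the mixture parameters are given by hand rather than learned from demonstrations, and the function names and dictionary keys are hypothetical.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)


def gmr_predict(x, components):
    """Conditional mean E[y | x] under a 2-D Gaussian mixture over (x, y).

    Each component is a dict with keys weight, mean_x, mean_y, var_x, cov_xy.
    Here x could stand for a gesture variation feature and y for an
    articulation parameter (both hypothetical).
    """
    # Responsibility of each component for the observed input x.
    resp = [c["weight"] * gaussian_pdf(x, c["mean_x"], c["var_x"]) for c in components]
    total = sum(resp)
    prediction = 0.0
    for r, c in zip(resp, components):
        # Linear regression implied by this component alone.
        local = c["mean_y"] + c["cov_xy"] / c["var_x"] * (x - c["mean_x"])
        prediction += (r / total) * local
    return prediction
```

Each component contributes a local linear map, and the responsibilities blend these maps smoothly, which is what lets intermediate inputs produce combinations of the demonstrated variations.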

CHI 2016 article [pdf]

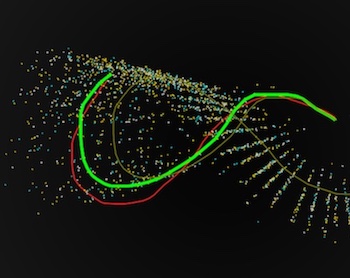

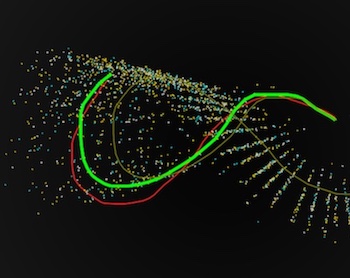

GESTURE VARIATION FOLLOWING (GVF): ALGORITHM AND APPLICATION

The algorithm allows for early recognition of gestures (i.e. recognizing a gesture as soon as it starts) and allows for estimating gesture variation (such as speed, scale, orientation, …) of a live gesture according to the learned (pre-recorded) templates.
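To make the idea of early recognition with variation estimation concrete, here is a simplified sketch. It is not the GVF algorithm itself: it replaces the actual inference with an exhaustive search over a small, hand-picked grid of speeds and scales, works on one-dimensional gestures only, and the names `sample` and `follow` are invented for the example.

```python
def sample(template, phase):
    """Linearly interpolate a template at a fractional index (clamped at the end)."""
    i = int(phase)
    if i >= len(template) - 1:
        return template[-1]
    frac = phase - i
    return (1 - frac) * template[i] + frac * template[i + 1]


def follow(live, templates, speeds=(0.5, 1.0, 2.0), scales=(0.5, 1.0, 2.0)):
    """Match the beginning of a live gesture against pre-recorded templates.

    Returns the template, speed and scale whose prediction best fits the
    samples observed so far, using mean squared error as the fit measure.
    """
    best = None
    for name, template in templates.items():
        for speed in speeds:
            for scale in scales:
                error = sum(
                    (x - scale * sample(template, t * speed)) ** 2
                    for t, x in enumerate(live)
                ) / len(live)
                if best is None or error < best[0]:
                    best = (error, name, speed, scale)
    error, name, speed, scale = best
    return {"template": name, "speed": speed, "scale": scale, "error": error}
```

Calling `follow` again each time a new sample arrives gives a running estimate of which template is being performed and how it is being varied, before the gesture is complete.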

The algorithm has been developed in the context of expressive continuous control of digital media at IRCAM Centre Pompidou (by Baptiste Caramiaux, Nicola Montecchio, Frédéric Bevilacqua) and integrated into various environments (MaxMSP, pure data, openFrameworks) at Goldsmiths (by Baptiste Caramiaux, Igor Correia, Matthew Gingold, Alvaro Sarasua) under the MGM project.

Gesture expressivity and muscle sensing

Motivated by the understanding of gesture expressivity, and complementing our previous work on shape gestures and free-air gestures, we aim to go further with the idea that expressivity is a visceral capacity of the human body. As such, to understand what makes a gesture expressive, we need to consider not only its spatial placement and orientation, but also its dynamics and the mechanisms enacting them. Our approach in this project is to assess gesture expressivity through muscle sensing. In doing so, we want to propose new ways to consider expressive and visceral Human-Computer Interaction. The project is based on, first, our previous study on expressive gesture variations for continuous interaction, and second, our pilot studies on muscle sensing for musical interaction. The former gave insights into the human motor capacity to intentionally control variations of simple shapes. The latter went further by inspecting how multimodal muscle sensing can inform on an expressive performance and can be controlled by a performer.

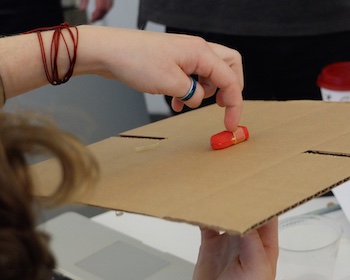

Form Follows Sound: Workshops on Sonic Interaction Design

Sonic interaction is the continuous relationship between user actions and sound, mediated by some technology. Interaction with sound may be task oriented or experience-based. Understanding the nature of action–sound relationships is an important aspect of designing rich sonic interactions.

We propose a user-centric approach to sonic interaction design that first considers the affordances of sounds in order to imagine embodied interaction, and based on this, generates interaction models for interaction designers wishing to work with sound.

To do so, we designed, together with Alessandro Altavilla and Atau Tanaka, a series of workshops where participants ideate imagined sonic interactions, and then realise working interactive sound prototypes. From these workshops we aim to find interaction models that could be used by interaction designers to create innovative action-based sonic interaction. We carried out the workshop 4 times in 4 different places: New York (a two-day workshop at Parsons The New School for Design), Paris (two-day workshop at IRCAM Centre Pompidou), London (one-day workshop at Goldsmiths College), and Zurich (one-day workshop at the ZHdK Academy of Arts).

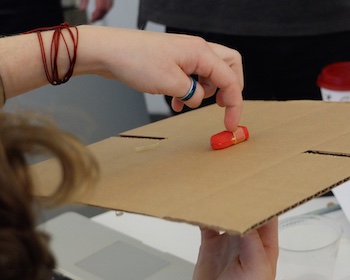

The workshops start with an ideation phase. The goal of this first phase is to generate ideas for action–sound relationships based on memories of sounds from participants' everyday lives. This phase does not involve technology. It is followed by a realisation phase, in which participants build working technology prototypes of the imagined sonic interactions. We created breakout groups to foster a group dynamic of mutual negotiation, teaching and understanding. Each group selected one imagined scenario from the set of sonic incidents described in the ideation phase. We provided a technological toolkit to realise a wide range of gestural sound interactions. The toolkit is an open project available on GitHub (see below).

ROLE OF SOUND PERCEPTION IN GESTURAL SOUND DESCRIPTION

There is increasing evidence that our capacity to move, performing gestures and actions, while listening to sounds (and music) is linked to our perception of sound. It is a form of Embodied Sound Cognition. Recent studies in cognitive neuroscience have shed light on the interaction between the motor system and the auditory system. Here we want to investigate how gesture and sound are related from a behavioural point of view.

We investigate gesture responses to sound stimuli whose causal action can or cannot be identified. We aim to provide behavioural insights into the gestural control of sounds according to their causal uncertainty, in order to inform the design of new sonic interactions.

To that end, we present a first experiment conducted to build two corpora of sounds. The first corpus contains sounds related to identifiable causal actions. The second contains sounds for which causal actions cannot be identified. Then, we present a second experiment in which participants had to associate a gesture with sounds from both corpora. They also had to verbalize their perception of the sounds and their gestural strategies while watching videos of their performance during an interview. We show that participants mainly mimic the action that produced the sound when the causal action can be identified. On the other hand, participants trace the acoustic contours of timbral features when no action can be identified as the cause of the sound. In addition, the gesture data show that inter-participant gesture variability is higher for causal sounds than for non-causal sounds. This variability indicates that in the first case, people have several ways to produce the same action, whereas in the second case, the sound itself is the common reference, which stabilizes the gesture responses.

Movement qualities as Interaction modality

We explore the use of movement qualities as an interaction modality. The notion of movement qualities is widely used in dance practice and can be understood as how the movement is performed, independently of its specific trajectory in space. We implemented our approach in the context of an artistic installation called A light touch. This installation invites the participant to interact with a moving light spot that reacts to the movement qualities of the hand. We conducted a user experiment showing that such an interaction based on movement qualities tends to enhance the user experience, favoring explorative and expressive usage.

Movement qualities are defined as movement behaviors described as configurations of a given dynamical system. The goal of the recognition is to estimate the parameters of the movement's dynamics and classify these dynamics into pre-defined behaviors. The three behaviors considered in this study are: oscillatory; damped and oscillatory; and critically damped.
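The sketch below illustrates only the classification step, under a strong assumption that the text does not state: that the dynamical system is a linear second-order mass-spring-damper, so that the behaviors can be separated by the damping ratio. The function names, the tolerance value, and the extra "other" label for dynamics outside the three behaviors are choices made for the example, and estimating the parameters from movement data is left out.

```python
import math


def damping_ratio(mass, damping, stiffness):
    """Damping ratio of the assumed system: mass * x'' + damping * x' + stiffness * x = 0."""
    return damping / (2.0 * math.sqrt(mass * stiffness))


def classify_behavior(mass, damping, stiffness, tol=0.05):
    """Map already-estimated dynamics parameters to one of the behaviors."""
    zeta = damping_ratio(mass, damping, stiffness)
    if zeta <= tol:
        return "oscillatory"             # (almost) no damping
    if abs(zeta - 1.0) <= tol:
        return "critically damped"       # returns to rest without oscillating
    if zeta < 1.0:
        return "damped and oscillatory"  # oscillation that dies out
    return "other"                       # not one of the three behaviors


if __name__ == "__main__":
    for params in [(1.0, 0.0, 4.0), (1.0, 1.0, 4.0), (1.0, 4.0, 4.0)]:
        print(params, classify_behavior(*params))
```

The tolerance matters in practice because parameters estimated from noisy hand movement will rarely give a damping ratio of exactly 0 or 1.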

Finally, we implemented an interaction technique based on movement qualities in the context of a large-scale artistic installation called A light touch. Our installation allows the participant to experience the MQ interaction by moving their hand inside an empty frame, where the quality of the hand movement controls a horizontal light spot projected onto a rear surface.

In order to evaluate how users experience the Movement Qualities interaction in A light touch, we invited participants to explore the installation according to a given protocol based on user experience evaluation methodology.